Overview

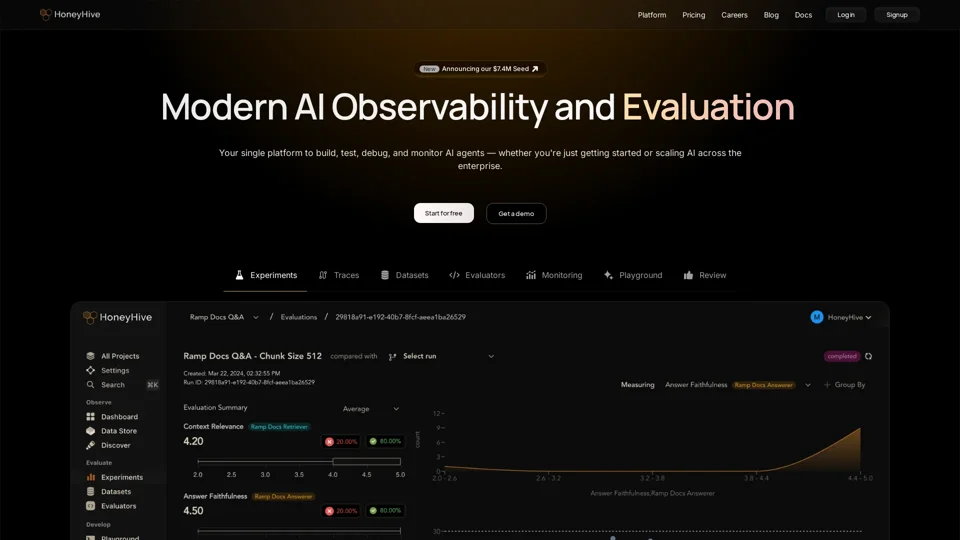

HoneyHive is an AI observability and evaluation platform for teams building large language model (LLM) applications. It gives engineers, product managers, and domain experts tools to evaluate, test, and monitor those applications. As a unified LLMOps platform, it also supports collaboration by letting users manage prompts in a shared workspace.

Product Features

- HoneyHive offers robust AI evaluation tools that help users assess the performance and accuracy of their LLM applications efficiently.

- The platform includes integrated testing functionalities that facilitate comprehensive debugging and troubleshooting of LLM failures in production.

- Teams can collaborate seamlessly within HoneyHive’s workspace, which promotes better project management and communication among stakeholders.

- Users can monitor their applications continuously, ensuring that issues are flagged and addressed promptly, thus enhancing overall application reliability.

Use Cases

- An engineering team can use HoneyHive to test and validate an LLM application before deployment, checking that it meets the team's performance standards.

- Product managers may leverage the observability tools to analyze user interactions and feedback within the application, guiding future development decisions.

- Domain experts can manage and refine prompts collaboratively, ensuring that outcomes align with specific business objectives and user needs.

User Benefits

- Users benefit from improved application reliability due to continuous monitoring and quick debugging capabilities.

- The collaborative environment fosters enhanced communication, ensuring that all team members are aligned and informed throughout the development process.

- Advanced testing tools reduce the time needed for validation and iteration of LLM applications, accelerating the time to market.

- Decision-making is enhanced as teams can use detailed evaluations and insights from the platform to inform strategies and improvements.

- By streamlining the development process, HoneyHive ultimately helps teams deliver high-quality applications that meet user expectations effectively.

FAQ

- What is the pricing for HoneyHive?

Pricing details are available upon request on the official platform and may vary based on features and team size.

- How is user privacy handled?

HoneyHive prioritizes user privacy and employs security measures to protect sensitive data within the platform.

- How do I sign up for HoneyHive?

Interested users can sign up directly on the platform's registration page by filling out the required information.

- Is HoneyHive compatible with other tools?

Yes, HoneyHive is designed to integrate with various development and monitoring tools to enhance user experience.

- What is the main value of using HoneyHive?

Users gain a comprehensive suite of tools that supports the complete lifecycle of LLM application development, from testing to monitoring and collaboration.